[01/2026] Congratulations to Dr. WANG Nizhuan on His Invited Talk at IECBS-IECNS 2026!

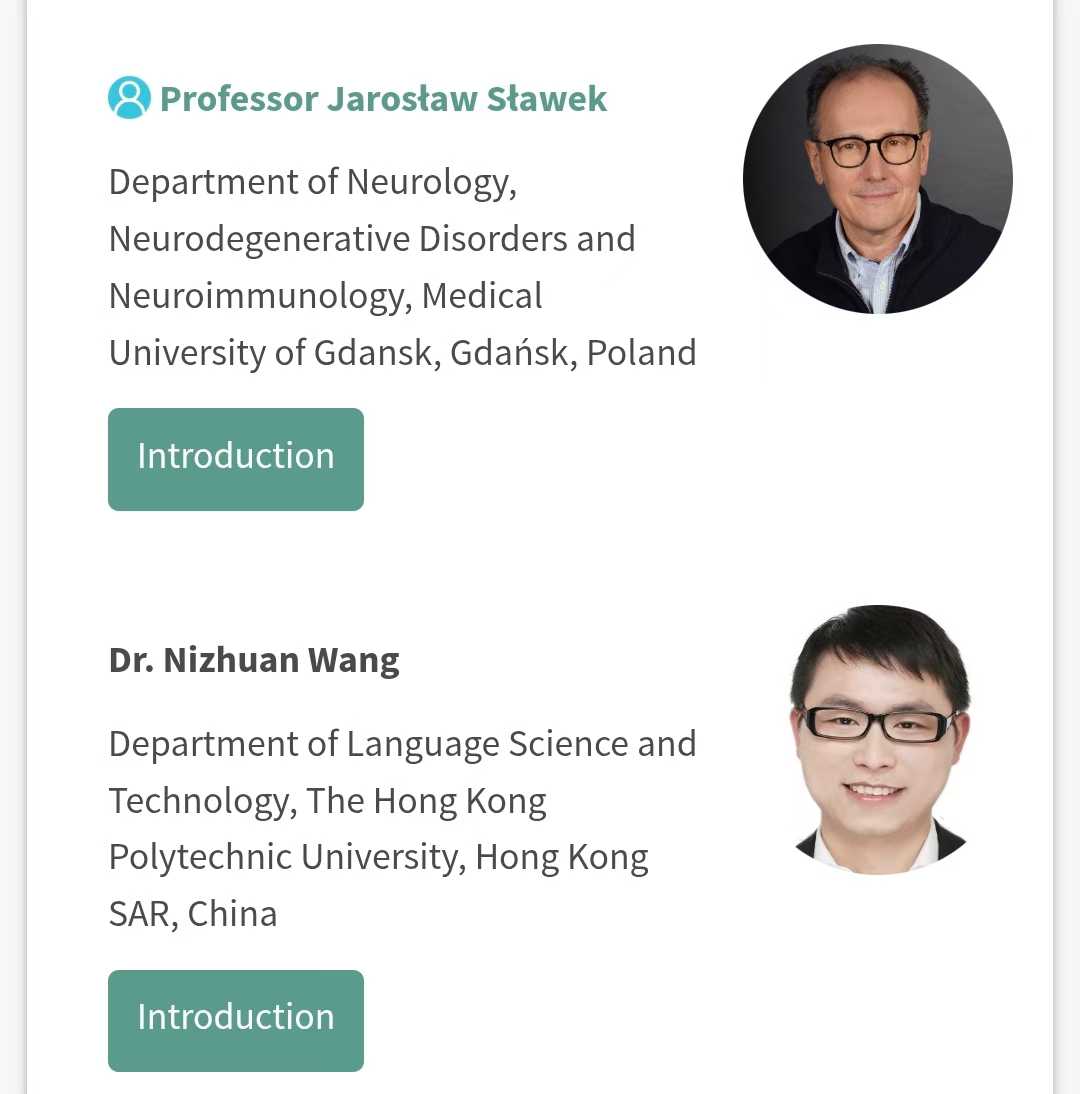

We are delighted to announce that Dr. WANG Nizhuan has been invited by Prof. Woon-Man Kung to deliver a talk at the 5th International Electronic Conference on Brain Sciences & 1st International Electronic Conference on Neurosciences (IECBS-IECNS 2026), which will be held online on March 9–11, 2026.

During the conference, Dr. WANG Nizhuan will present a comprehensive analysis to experts, scholars, and colleagues worldwide, highlighting the current landscape, key challenges, and future directions of single-channel EEG-based brain-computer interfaces.

The talk title is: “Single-Channel EEG-Based Brain-Computer Interfaces: Current Landscape and Future Directions”. He looks forward to meeting everyone at IECBS-IECNS 2026.

For more information about the conference and the speaker session, please visit: https://sciforum.net/event/IECBS-IECNS2026?section=#event_speakers

[12/2025] Congratulations! Lei’s paper has been accepted by Visual Computing for Industry, Biomedicine, and Art!

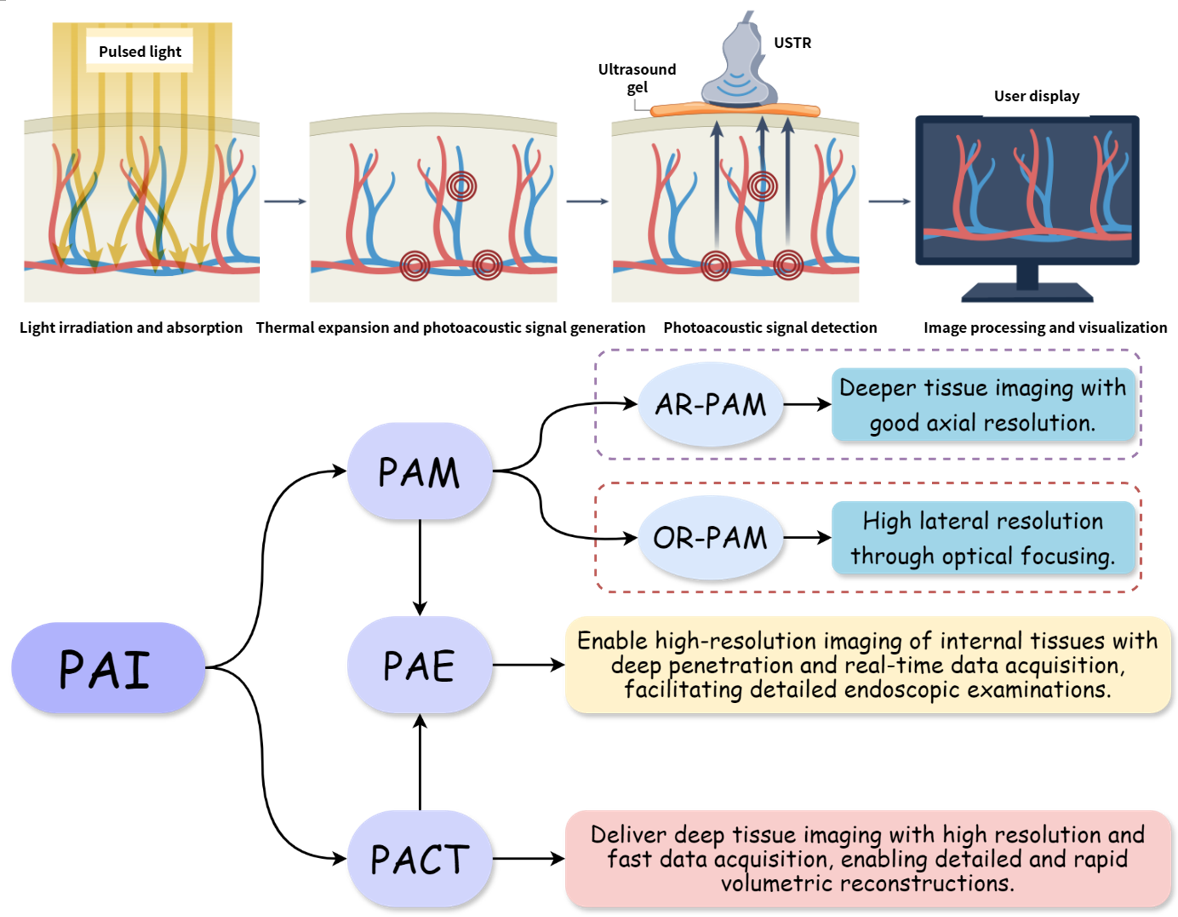

Lei Wang, Weiming Zeng, Kai Long, Hongyu Chen, Rongfeng Lan, Li Liu, Wai Ting Siok, Nizhuan Wang. Advances in Photoacoustic Imaging Reconstruction and Quantitative Analysis for Biomedical Applications [J]. Visual Computing for Industry, Biomedicine, and Art, 2025.

Abstract:

Photoacoustic imaging (PAI), a modality that combines the high contrast of optical imaging with the deep penetration of ultrasound, is rapidly transitioning from preclinical research to clinical practice. However, its widespread clinical adoption faces challenges such as the inherent trade-off between penetration depth and spatial resolution, along with the demand for faster imaging speeds. This review comprehensively examines the fundamental principles of PAI, focusing on three primary implementations: photoacoustic computed tomography (PACT), photoacoustic microscopy (PAM), and photoacoustic endoscopy (PAE). It critically analyzes their respective advantages and limitations to provide insights into practical applications. The discussion then extends to recent advancements in image reconstruction and artifact suppression, where both conventional and deep learning (DL)-based approaches have been highlighted for their role in enhancing image quality and streamlining workflows. Furthermore, this work explores progress in quantitative PAI, particularly its ability to precisely measure hemoglobin concentration, oxygen saturation, and other physiological biomarkers. Finally, this review outlines emerging trends and future directions, underscoring the transformative potential of DL in shaping the clinical evolution of PAI.

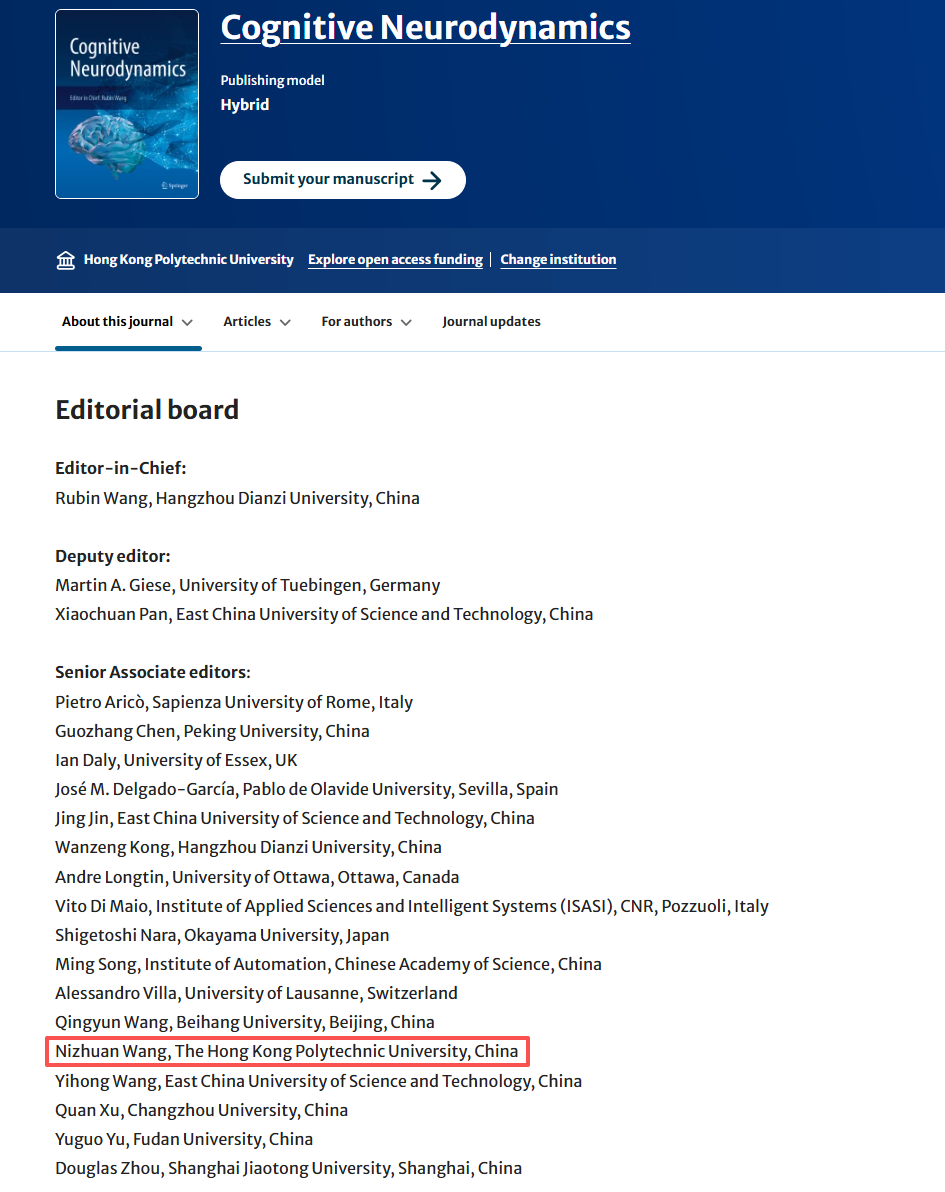

[12/2025] Congratulations to Dr. WANG Nizhuan on His Election as Senior Associate Editor of Cognitive Neurodynamics

We are delighted to announce that Dr. WANG Nizhuan has been elected as Senior Associate Editor of Cognitive Neurodynamics (CODY), a prestigious hybrid journal published by Springer Nature.

Founded in 2007, Cognitive Neurodynamics has established itself as a key academic platform in its field. Its latest impact factor is 3.9, and it is ranked Q2 in the Journal Citation Reports (JCR). The journal focuses on cutting-edge research areas including cognitive neuroscience, brain-computer interfaces, and computational neuroscience, providing a vital forum for scholars worldwide to exchange innovative ideas and findings.

For more information about the journal and its editorial board, please visit: https://link.springer.com/journal/11571/editorial-board

[10/2025] Congratulations!

Dr. WANG Nizhuan has been invited to deliver a plenary address at the 2025 International Neural Regeneration Symposium (INRS2025), held on October 24–26, 2025. His presentation, titled “From Neural Mechanisms to Clinical Diagnosis: Decoding Brain Disorders via AI-powered Neuroimaging,” will showcase his pioneering research at the intersection of AI, neuroimaging, and brain disorders.

[08/2025] One paper has been accepted to MIND2025 (Oral)!

Yueyang Li, Shengyu Gong, Weiming Zeng, Nizhuan Wang, Wai Ting Siok. FreqDGT: Frequency-Adaptive Dynamic Graph Networks with Transformer for Cross-subject EEG Emotion Recognition. The 2025 International Conference on Machine Intelligence and Nature-InspireD Computing (MIND).

Abstract:

Electroencephalography (EEG) serves as a reliable and objective signal for emotion recognition in affective brain-computer interfaces, offering unique advantages through its high temporal resolution and ability to capture authentic emotional states that cannot be consciously controlled. However, cross-subject generalization remains a fundamental challenge due to individual variability, cognitive traits, and emotional responses. We propose FreqDGT, a frequency-adaptive dynamic graph transformer that systematically addresses these limitations through an integrated framework. FreqDGT introduces frequency-adaptive processing (FAP) to dynamically weight emotion-relevant frequency bands based on neuroscientific evidence, employs adaptive dynamic graph learning (ADGL) to learn input-specific brain connectivity patterns, and implements a multi-scale temporal disentanglement network (MTDN) that combines hierarchical temporal transformers with adversarial feature disentanglement to both capture temporal dynamics and ensure cross-subject robustness. Comprehensive experiments demonstrate that FreqDGT significantly improves cross-subject emotion recognition accuracy, confirming the effectiveness of integrating frequency-adaptive, spatial-dynamic, and temporal-hierarchical modeling while ensuring robustness to individual differences. The code is available at https://github.com/NZWANG/FreqDGT.

[07/2025] Two papers have been accepted to Neural Networks!

Wenhao Dong*, Yueyang Li*, Weiming Zeng, Lei Chen, Hongjie Yan, Wai Ting Siok, Nizhuan Wang. STARFormer: A Novel Spatio-Temporal Aggregation Reorganization Transformer of FMRI for Brain Disorder Diagnosis. Neural Networks (2025): 107927.

Abstract:

Many existing methods that use functional magnetic resonance imaging (fMRI) to classify brain disorders, such as autism spectrum disorder (ASD) and attention deficit hyperactivity disorder (ADHD), often overlook the integration of spatial and temporal dependencies of the blood oxygen level-dependent (BOLD) signals, ….

Hongyu Chen, Weiming Zeng, Chengcheng Chen, Luhui Cai, Fei Wang, Yuhu Shi, Lei Wang, Wei Zhang, Yueyang Li, Hongjie Yan, Wai Ting Siok, Nizhuan Wang. EEG emotion copilot: Optimizing lightweight LLMs for emotional EEG interpretation with assisted medical record generation. Neural Networks (2025): 107848.

Abstract:

In the fields of affective computing (AC) and brain-computer interface (BCI), the analysis of physiological and behavioral signals to discern individual emotional states has emerged as a critical research frontier. While deep learning-based approaches have made notable strides in EEG emotion recognition, particularly in feature extraction and pattern recognition, significant challenges persist in achieving end-to-end emotion computation, including rapid processing, individual adaptation….